Contributed by: María Óskarsdóttir, Bart Baesens

This article first appeared in Data Science Briefings, the DataMiningApps newsletter.

Numerous websites that offer services or sell products are equipped with a rating system where users can rate and describe the services they have received or the items they have bought. People rely heavily on these online rating systems when making choices such as which movie to watch, where to go on holiday and which candidate to vote for. As a result, the systems have great economic value. But are the rating systems reliable? Do they accurately represent the true quality of the products, or is the information we receive biased by social influence, herding effects and differentiation?

To test this potential bias, Muchnik, Aral, and Taylor (2013) set up a randomized experiment on a news aggregation website. On this website, users can share news articles, comment on them and up- or down-vote the comments of others. By aggregating the number of up- and down-votes, each comment receives a rating which is displayed next to it. This serves as a first impression of the quality of the comments when the user scrolls through them. In addition, users can like and dislike other users, thus forming networks of friends and enemies.

The experiment was carried out over five months, during which time a number of randomly selected comments were treated before being displayed on the website, either by (1) up-voting them (up-treated), (2) down-voting them (down-treated) or by (3) doing nothing (control).
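The assignment step of such an experiment can be illustrated with a minimal sketch. It is not the authors' implementation: the arm probabilities in `WEIGHTS`, the function names and the idea that a treatment amounts to a single initial vote of +1 or -1 are all assumptions made for illustration, since the article does not report these details.

```python
import random
from collections import Counter

# The three experimental arms described in the article.
ARMS = ("up-treated", "down-treated", "control")

# Hypothetical assignment probabilities (assumption: the article does not
# report how many comments fell into each arm).
WEIGHTS = (0.1, 0.1, 0.8)


def assign_arm(rng: random.Random) -> str:
    """Randomly assign a new comment to one of the three arms."""
    return rng.choices(ARMS, weights=WEIGHTS, k=1)[0]


def initial_score(arm: str) -> int:
    """Score shown before any real user votes (assumed: one artificial vote)."""
    return {"up-treated": 1, "down-treated": -1, "control": 0}[arm]


# Assign 10,000 simulated comments with a fixed seed for reproducibility.
rng = random.Random(42)
assignments = [assign_arm(rng) for _ in range(10_000)]
counts = Counter(assignments)
print(counts)
```

Because the arm is chosen at random and independently of the comment's content, any later difference in voting between the arms can be attributed to the treatment rather than to comment quality.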

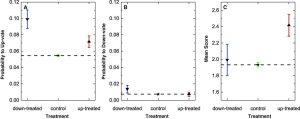

Figure 1: Effect of manipulation on voting behavior. The positively manipulated treatment group (up-treated), the negatively manipulated treatment group (down-treated), and the control group (dotted line) are shown. Adapted from Muchnik et al. (2013, p. 648).

The three panels in Figure 1 show the main findings of the experiment. The panel on the left shows the probability that a down-treated (blue), control (dotted line) or up-treated (red) comment is up-voted by the first viewer. The panel in the middle shows the same for the probability of being down-voted by the first viewer. As the dotted line in these two panels shows, a control comment is more likely to be up-voted (5.15%) by the first viewer than down-voted (0.82%). Looking at the effects on the treated comments, up-treated comments are 32% more likely to be up-voted than the control, but are not more likely to be down-voted. Down-treated comments, on the other hand, are more likely than the control to be both up-voted and down-voted, with both probabilities being significantly different from the control. The panel on the right shows the cumulative score of the comments over the span of five months. Clearly, the up-treated comments receive a significantly higher score than the control, whereas the down-treated comments do not.
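To make the reported percentages concrete, the short calculation below works out what they imply. It is a sketch under one assumption: that "32% more likely" is a relative increase over the control probability. The resulting up-treated probability is derived here for illustration and is not a figure reported in the article.

```python
# Probabilities for control comments, as reported in the article.
control_up = 0.0515    # first viewer up-votes a control comment
control_down = 0.0082  # first viewer down-votes a control comment

# Reported relative increase in up-voting for up-treated comments.
up_treated_lift = 0.32

# Implied up-vote probability for up-treated comments
# (assumption: the 32% is relative to the control probability).
up_treated_up = control_up * (1 + up_treated_lift)

# How much more often a control comment is up-voted than down-voted.
up_to_down_ratio = control_up / control_down

print(f"Implied up-vote probability (up-treated): {up_treated_up:.2%}")
print(f"Up-vote to down-vote ratio (control): {up_to_down_ratio:.2f}")
```

Under this reading, the treatment moves the first viewer's up-vote probability from about 5.2% to about 6.8%, while control comments are roughly six times more likely to be up-voted than down-voted.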

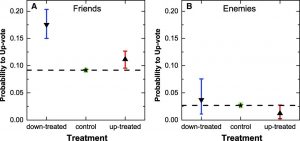

When taking into account friendships on the website, the result in Figure 2 are obtained. The two sub-figures display the probability to up-vote a comment of a friend and an enemy. According to these results, users are more likely to up-vote both the upand down-treated comments of their friends, whereas for enemies, the probabilities are not significantly different from the control. This displays a clear influence of friendship on up-voting.

Figure 2: Effects of friendship on rating behavior. The figure shows the probability of a friend (A) and enemy (B) of the commenter to up-vote positively manipulated, negatively manipulated, and control group comments. Adapted from Muchnik et al. (2013, p. 649)

In the paper, the authors discuss and analyse two data-driven processes that can explain the evident herding of positive ratings, namely selective turn-out and opinion change. Selective turn-out occurs when people who usually don’t vote on comments do so because they see a comment that has been treated. Opinion change occurs when a treated comment causes someone who would have voted one way to change their mind and vote the opposite way. In summary, the herding effect is a combination of users changing their opinion, the general tendency to up-vote and greater turnout for both up- and down-treated comments.

The concept of social influence on the internet is clearly important, and research on it has boomed in the last few years. One such study investigates the social and structural moderators of peer influence in networks, showing that both embeddedness and tie strength increase influence but physical interaction does not have an effect (Aral & Walker, 2014). Furthermore, Lee, Hosanagar, and Tan (2015) investigated the differential impact of prior ratings by crowds as opposed to ratings by friends when selecting a movie to watch. Their results imply that the ratings of one’s friends always induce herding, whereas the ratings of crowds show evidence of either herding or differentiation, depending on whether the movie is popular or non-popular, respectively.

As the above-mentioned studies show, there is evidence of bias in online rating systems in the form of herding of positive ratings. Further research is needed to better understand these systems and to build unbiased ones that are less sensitive to social influence and accurately capture the true quality of the products they are meant to rate.

References

- Aral, S., & Walker, D. (2014). Tie strength, embeddedness, and social influence: A large-scale networked experiment. Management Science, 60(6), 1352–1370.

- Lee, Y.-J., Hosanagar, K., & Tan, Y. (2015). Do I follow my friends or the crowd? Information cascades in online movie ratings. Management Science, 61(9), 2241–2258.

- Muchnik, L., Aral, S., & Taylor, S. J. (2013). Social influence bias: A randomized experiment. Science, 341(6146), 647–651.