Contributed by: Michael Reusens, Wilfried Lemahieu, Bart Baesens

This article first appeared in Data Science Briefings, the DataMiningApps newsletter. Subscribe now for free if you want to be the first to receive our feature articles, or follow us @DataMiningApps. Do you also wish to contribute to Data Science Briefings? Shoot us an e-mail over at briefings@dataminingapps.com and let’s get in touch!

In this short article, we present a brief introduction to deep learning. We will discuss its prominent algorithms and applications, and main drawbacks that are slowing down adoption.

In order to capture simple patterns in data, basic modelling techniques (also called shallow modelling techniques) such as SVM’s and logistics regression are usually performing well. However, as the complexity of the input data increases, artificial neural networks (ANN’s) tend to be more performant in capturing the underlying patterns in the data. For very complex input data (such as images, unstructured text and audio) ANN’s with a small number of hidden layers (also called shallow ANN’s) become insufficiently accurate. For this type of data, deep ANN’s, which are ANN’s with more hidden layers, are currently the most performant algorithms.

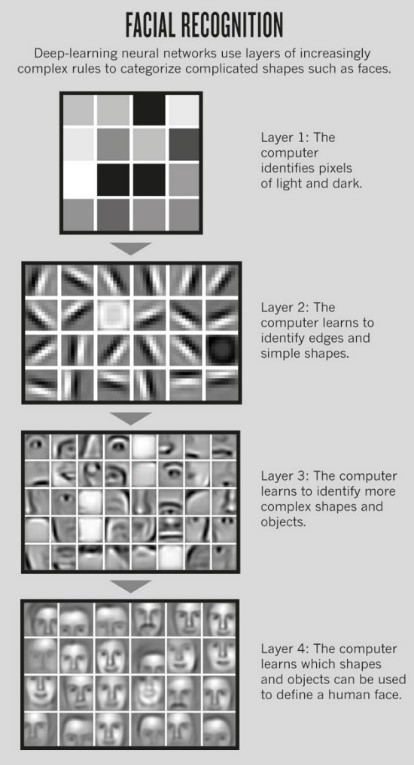

Deep ANN’s are just one class of algorithms in the broader category covered by deep learning. Many definitions of deep learning exist (Deng & Yu, 2014; Schmidhuber, 2015), however, most definitions agree that deep learning is defined as a branch of machine learning that covers the set of algorithms that model complex patterns by feeding data through multiple non-linear transformations causing each level to capture a different level of abstraction. A nice example of the hierarchical levels of abstraction in deep learning can be found in the domain of face recognition. In face recognition, the task is to automatically detect faces of specific people on images. The first abstraction level of a typical deep learning face recognition model is the raw input data, usually the individual pixels of the image, followed by edges and contrasting colors. A combination of edges and color contrasts can then constitute facial features, such as eyes, a nose, a mouth,… and finally, a combination of facial features can constitute a specific face. These different levels of abstractions are also shown in Figure 1.

Figure 1: Different level of abstraction in facial recognition. Image From: Andrew Ng

Source: http://www.nature.com/news/computer-science-the-learning-machines-1.14481

Most deep learning techniques are extensions or adaptions of ANN’s, called deep nets. Different configurations of deep nets are suitable for different machine learning tasks: Restricted Boltzman Machines (RBM’s) (Smolensky 1986; Hinton & Salakhutdinov, 2006) and Autoencoders (Vincent, Larochelle, Bengio, & Manzagol ,2008) are the main deep learning techniques for finding patterns in unlabeled data. This includes tasks such as feature extraction, pattern recognition and other unsupervised learning settings. Recurrent nets (Graves et al. , 2009. ; Sak, Senior & Beaufays, 2014) are the state of the art in text processing (sentiment analysis, text parsing, named entity recognition,…), speech recognition and time series analysis. Deep believe nets (Hinton, Osindero & The, 2006; Taylor, Hinton & Roweis, 2006) and convolutional nets (Lee, Grosse, Ranganath & Ng, 2009) are today’s best choice for imagine recognition. Deep believe networks are also state of the art in classification, together with multilayered perceptrons with rectified linear units (MLP/RELU).

In many of the aforementioned tasks, deep nets are outperforming their shallow counterparts. However, algorithms required to capture complex patterns tend to have two main drawbacks: lack of scrutability and large training time-complexity. The scrutability of models and their predictions refers to how understandable they are. For example, if a deep net would classify a person as someone likely to default on a loan it is very hard to find out what interplay of features describing the person are the reason of the classification. Models with very low scrutability are also called black box techniques. The opposite of black box techniques are white box techniques. These are the techniques of which the reasoning is more easy to understand. One of the “whitest” white box techniques are decision trees. They are easily inspectable, and classifications made by decision trees are trivially understandable by manually traversing the tree. In domains that require an understanding of why certain predictions are made by its models, black box techniques such as deep nets are often unacceptable. However, extracting rules from neural networks, and thereby deblackboxing them, is a current interesting research domain that can mitigate this drawback and make deep nets applicable in domains in which scrutability is a requirement (Baesens, Setiono, Mues & Vanthienen, 2003).

The training time-complexity drawback stems from the fact that using many layers of interconnected nodes requires many connection-weights to be optimized and a large amount of training data to do so. Training algorithms that are applicable when training shallow ANN’s, such as backpropagation (Rumelhart, Hinton & Williams, 1988), become unusable for deep nets in terms of training time and model performance. In the current state of the art of training algorithms, running the algorithms on multiple high-performance GPU’s (Graphical Processing Units), training a deep net can take weeks in complex cases.

Nevertheless, as research on deep learning advances, processing power of GPU’s increases, and research on deblackboxing neural networks advances, these drawbacks could soon be mitigated, making deep learning something to look out for in the future of many machine learning tasks.

If all this seemed a little too much too quick for you, we would love to point you to the following YouTube channel: https://www.youtube.com/channel/UC9OeZkIwhzfv-_Cb7fCikLQ. This channel introduces all the concepts mentioned above on an intuitive level that will make you understand the connections between all concepts introduced in this blogpost.

References and Further Reading

- Baesens, B., Setiono, R., Mues, C., & Vanthienen, J. (2003). Using neural network rule extraction and decision tables for credit-risk evaluation.Management science, 49(3), 312-329.

- Deng, L., & Yu, D. (2014). Foundations and Trends in Signal Processing.Signal Processing, 7, 3-4.

- Graves, A., Liwicki, M., Fernández, S., Bertolami, R., Bunke, H., & Schmidhuber, J. (2009). A novel connectionist system for unconstrained handwriting recognition. Pattern Analysis and Machine Intelligence, IEEE Transactions on, 31(5), 855-868.

- Hinton, G. E., Osindero, S., & Teh, Y. W. (2006). A fast learning algorithm for deep belief nets. Neural computation, 18(7), 1527-1554.

- Hinton, G. E., & Salakhutdinov, R. R. (2006). Reducing the dimensionality of data with neural networks. Science, 313(5786), 504-507.

- Lee, H., Grosse, R., Ranganath, R., & Ng, A. Y. (2009). Convolutional deep belief networks for scalable unsupervised learning of hierarchical representations. In Proceedings of the 26th Annual International Conference on Machine Learning (pp. 609-616). ACM.

- Rumelhart, D. E., Hinton, G. E., & Williams, R. J. (1988). Learning representations by back-propagating errors. Cognitive modeling, 5(3), 1.

- Sak, H., Senior, A. W., & Beaufays, F. (2014). Long short-term memory recurrent neural network architectures for large scale acoustic modeling. In INTERSPEECH (pp. 338-342).

- Schmidhuber, J. (2015). Deep learning in neural networks: An overview. Neural Networks, 61, 85-117.

- Smolensky, P. (1986). Chapter 6: Information Processing in Dynamical Systems: Foundations of Harmony Theory. Processing of the Parallel Distributed: Explorations in the Microstructure of Cognition, Volume 1: Foundations.

- Taylor, G. W., Hinton, G. E., & Roweis, S. T. (2006). Modeling human motion using binary latent variables. In Advances in neural information processing systems (pp. 1345-1352).

- Vincent, P., Larochelle, H., Bengio, Y., & Manzagol, P. A. (2008). Extracting and composing robust features with denoising autoencoders. InProceedings of the 25th international conference on Machine learning (pp. 1096-1103). ACM.